Yesterday I saw a zinger of an article on a topic I’ve thought about a lot in the last few years: GitOps.

From the title, “GitOps is a Placebo,” I expected a post on the good and bad of what Steve had seen from GitOps, with an ultimately negative overall sentiment. However, he takes a cynical view of the entirety of GitOps, saying that it “has no new ideas of substance.”

I understand some of the points he makes about marketing hype, but I do think there is merit in GitOps if we distill it down to its principles (which, as he says, are not new) and look at how they are applied in a set of specific scenarios with a certain set of tools, and therefore communities. One thing that has been clear to me over the last couple of years is that GitOps confuses a lot of people. Some think they are already doing it by checking code into a repo, and others think they can sprinkle in a few tools to solve all of their CD problems.

I’ll try to give my view of what GitOps is and assess some of its pros and cons. I’ll also try to give a bit more insight into what it looks like to:

- Implement GitOps in the context of the CNCF (Kubernetes, Flux, Argo, etc.)

- Use a GitOps pattern, which, as Steve mentions, has existed in many places for many, many years as Continuous Delivery and DevOps patterns

“GitOps” is coined

Let’s start with a little bit of the origin story…

“GitOps” was coined as a term for a set of practices that can be used to continuously deliver software. This term was first used by Alexis Richardson in 2017 as a way to define the patterns and tools the teams at Weaveworks used to deliver software and manage their infrastructure. Namely, they kept declarative definitions of their infrastructure (via Terraform) and applications (via Kubernetes YAML) versioned in a branch of a Git repository, where changes were submitted for approval and then applied by automation.

Kubernetes configs were applied by a tool they built, called Flux, that would poll a Git branch and ensure that any changes to the repo were realized in their clusters. The team took things one step further after deployment to mitigate config drift. They used tools (kubediff and terradiff) to check the configuration in their repos and compare it to their running infrastructure. When a discrepancy was found they would get alerted (via Slack) to out-of-band changes to the system so they could rectify them.

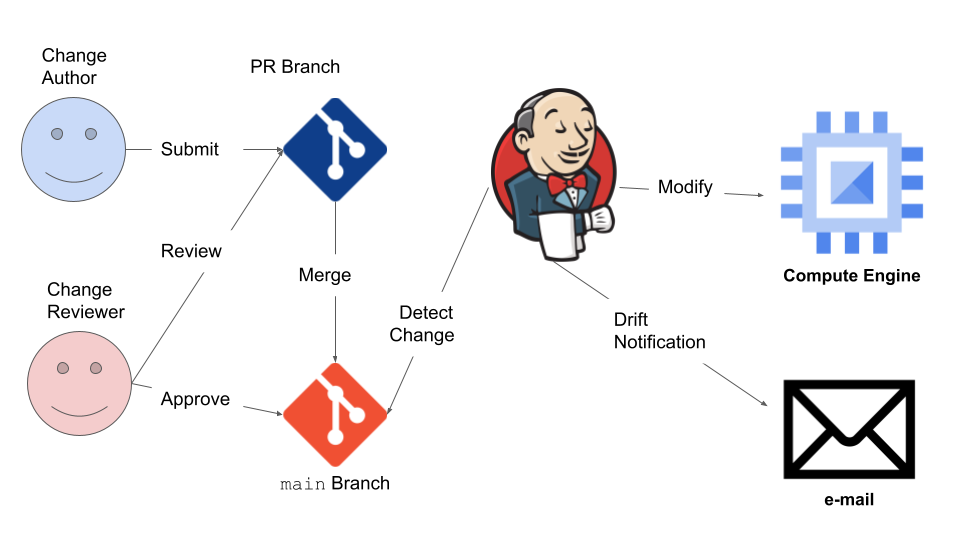

The diagram below shows what the implementation of the pattern and tools looked like at Weaveworks in 2017. They used Flux as an “Actuator” to apply config to Kubernetes APIs and kubediff as a “Drift Detector” to notify in Slack when drift occurred.

If you abstract away the specific tools used you see a pattern like this:

The evolving GitOps tool landscape

GitOps tools have evolved since 2017, with many more vendors implementing the “Deployment System” box and the creation of new sub-systems that aid with things like managing configuration (Helm/Kustomize/etc.) and canary releases. One big change in the architecture of the pattern is that the “Actuator” and “Drift Detector” components have been unified into a single system that does both tasks. Additionally, drift detection has been extended to remediation: if config drift is detected, the “Deployment System” resets the changed configuration to what is stored in the Git repository.

To that end, we can simplify things in our pattern and say that a GitOps “Deployment System” has the following attributes:

- Polls a Git repo to detect changes

- Mutates the infrastructure to match the committed changes

- Notifies or remediates when config drift occurs
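The three attributes above can be sketched with nothing more than two directories. This is a minimal illustration, not how Flux or any other tool is implemented: `DESIRED` stands in for a checkout of the Git repo, `LIVE` stands in for the running infrastructure, and the function names (`detect_drift`, `actuate`, `reconcile_once`) and the `vm-1.yaml` file are made up for the example.

```shell
#!/usr/bin/env bash
# Sketch of one GitOps-style reconcile pass using plain directories.
set -euo pipefail

DESIRED=$(mktemp -d)   # hypothetical stand-in for a checkout of the config repo
LIVE=$(mktemp -d)      # hypothetical stand-in for state read from the platform

# Returns 0 when the live state matches the desired state, 1 when it has drifted.
detect_drift() {
  diff -r "$DESIRED" "$LIVE" > /dev/null
}

# Overwrites the live state with the desired state (the "Actuator" step).
actuate() {
  rm -rf "${LIVE:?}"/*
  cp -R "$DESIRED"/. "$LIVE"/
}

# One pass of the loop: report drift, then remediate it.
reconcile_once() {
  if detect_drift; then
    echo "in sync"
  else
    echo "drift detected, remediating"
    actuate
  fi
}

echo "size: small" > "$DESIRED/vm-1.yaml"   # a change is merged into the repo
FIRST=$(reconcile_once)
echo "size: large" > "$LIVE/vm-1.yaml"      # someone changes the system out-of-band
SECOND=$(reconcile_once)
THIRD=$(reconcile_once)

echo "$FIRST"
echo "$SECOND"
echo "$THIRD"
```

A real deployment system would run `reconcile_once` on a timer or on each new commit, and `actuate` would call platform APIs rather than copy files. The sketch treats a new commit and an out-of-band change the same way: both show up as a difference between desired and live state.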

What are the underlying capabilities that the pattern leverages?

While the term “GitOps” was new in 2017, as the initial article states: “There is prior art here.” The general pattern described above has been in active use among many config management communities through things like Chef, Puppet, and Ansible’s server components. Dr. Nicole Forsgren, Jez Humble and the DORA team have shown how the attributes above and many other key capabilities have helped enterprises ship better software for years. Side note: this content from the team is EXCEPTIONAL.

Let’s break down some of the tradeoffs of GitOps. I’ll frame these broadly in the terms used by DORA in their DevOps Capability Catalog:

- Version Control and Streamlined Change Approval – Use Git repos as a way to store the state you want your system to be in. Ensure that humans can’t commit directly to the deployment branch of the Git repo and that changes must receive a review before merging.
  - Pros
    - Changes become transactional
    - Rolling back changes can be done by reverting commits (you hope)
    - Each change has an audit trail with metadata about the contents, time, author, and reviewer
    - Pull requests provide a place for discourse on the proposed changes
    - The PR can hold the status of the change as it progresses through the system
    - Existing RBAC applied in the repo controls who can commit/merge/review, etc.
  - Cons
    - Break-glass scenarios can become more cumbersome
    - Rollbacks can be difficult
    - Not all roles (infra/security/etc.) are comfortable with Git
    - Code-level RBAC isn’t always the correct granularity or UX for deployment approvals

- Deployment Automation – When changes are merged into the correct branch, an “Actuator” detects the change by polling for new commits. The “Actuator” decides what changes need to be made to realize the desired configuration and then calls APIs to bring the system into the right state.
  - Pros
    - Neither the change authors nor the CI system need access to the APIs; only the “Actuator” does
    - “Actuator” can continually reconcile the state of the system
  - Cons
    - “Actuator” has to be kept up-to-date as the platform it is deploying to evolves

- Proactive Failure Detection – There is a subsystem in the “Deployment System”, the “Drift Detector”, which reads from the Git repo and the imperative APIs to detect whether the configuration matches the current state of the system. If there is a discrepancy, it sends a notification to administrators so they can rectify it.
  - Pros
    - Changes made out-of-band from the deployment system are flagged for inspection and/or remediation
  - Cons
    - If multiple deployment systems have been wired up or manual changes are required, they can trigger false alarms
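The “rolling back by reverting commits” point can be tried out in a throwaway repository. This is a minimal sketch with a made-up `instances/vm-1.yaml` file and illustrative machine types. It only shows the Git side of a rollback: the “Actuator” still has to apply the reverted config, and anything the change did outside of the config (data migrations, for example) is not undone by it, which is where the “you hope” comes from.

```shell
#!/usr/bin/env bash
# Sketch: roll back a config change with `git revert` in a temporary repo.
set -euo pipefail

REPO=$(mktemp -d)
cd "$REPO"
git init -q
# Set an identity locally so the sketch works on a machine with no Git config.
git config user.name "Example Author"
git config user.email "author@example.com"

mkdir instances
echo "machineType: e2-small" > instances/vm-1.yaml
git add .
git commit -q -m "Add vm-1"

echo "machineType: e2-standard-8" > instances/vm-1.yaml
git commit -q -am "Resize vm-1"

# Roll back by adding a new commit that undoes the last one.
# The original change stays in history, so the audit trail is preserved.
git revert --no-edit HEAD > /dev/null

ROLLED_BACK=$(cat instances/vm-1.yaml)
COMMITS=$(git rev-list --count HEAD)
echo "$ROLLED_BACK ($COMMITS commits)"
```

Because the revert is itself a commit, it can go through the same review and approval path as any other change, or be fast-tracked in a break-glass scenario.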

GitOps with Jenkins and GCE? Please Vic, no.

Okay, so we’ve broken down some of the key capabilities. Let’s see if we can take some tooling from pre-Kubernetes times to implement a similar “Deployment System”.

As most of you know, I’m quite a fan of Jenkins. Let’s see if we can use it to follow the GitOps pattern, but rather than deploying to Kubernetes, let’s deploy a set of VMs in Google Compute Engine. Since Jenkins is a relatively open-ended CI system and has very strong polling and Git repo capabilities, this should be a breeze.

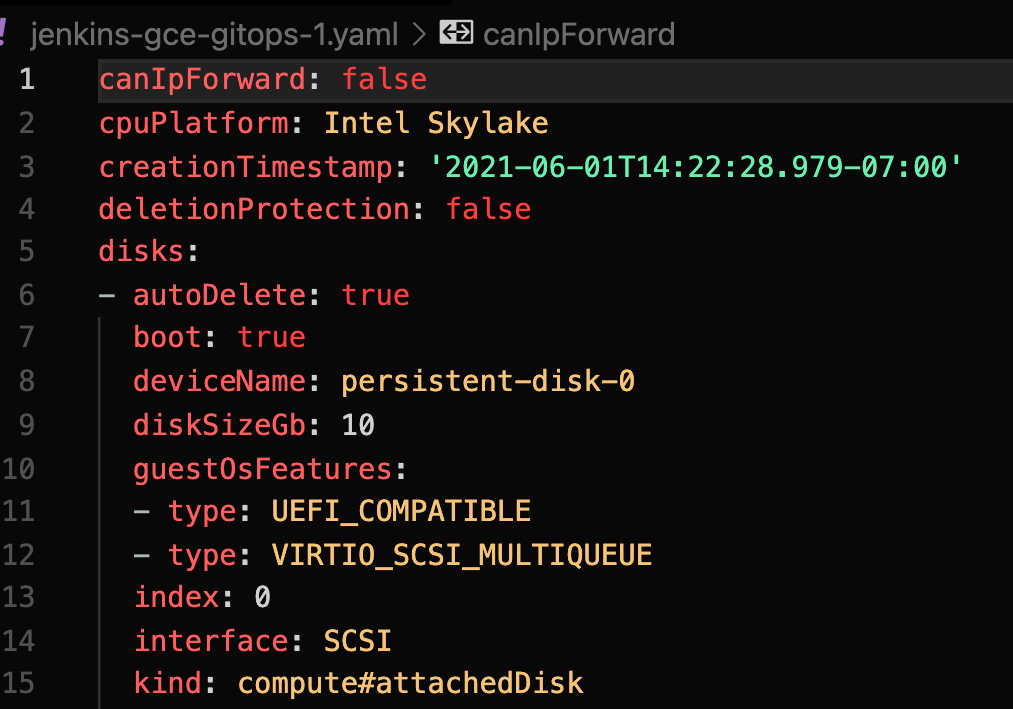

First we’ll create a GCE Instance and gather its configuration:

    # First create the instance
    gcloud compute instances create jenkins-gce-gitops-1

    # Now export its config to disk
    # (the pipelines below expect this file in the repo's instances folder)
    gcloud compute instances export jenkins-gce-gitops-1 > jenkins-gce-gitops-1.yaml

The resulting file should contain all the info about your instance:

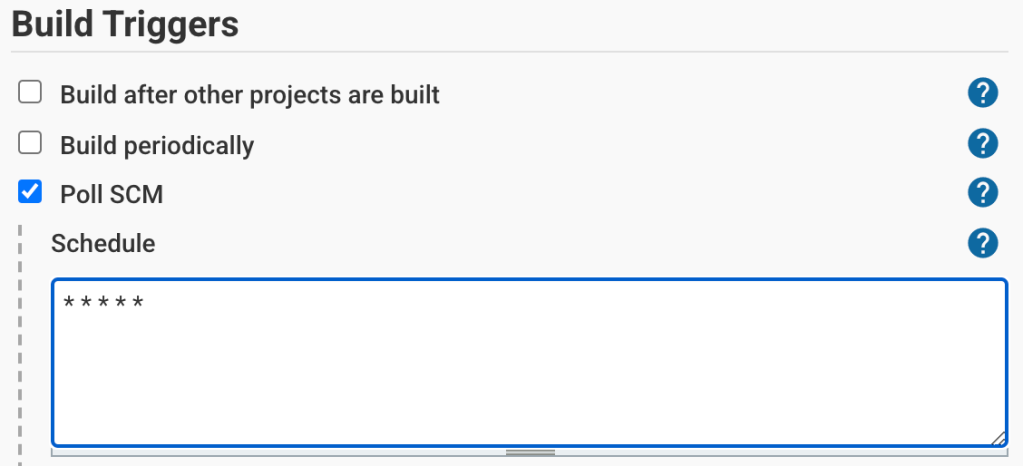

Next, we’ll create a Jenkins Job to do the work of the “Actuator” above. We’ll set it to poll the source code repository every minute for changes:

Now, we’ll write a Jenkinsfile that will take the configuration from our repo and apply it to Google Compute Engine.

    pipeline {
        agent any
        stages {
            stage('Actuate Deployment') {
                steps {
                    sh '''
                        cd instances
                        # Each YAML file in this folder describes one instance
                        for file in *.yaml; do
                            NAME=$(basename "$file" .yaml)
                            # Update instance config
                            gcloud compute instances update-from-file "$NAME" --source "$file"
                        done
                    '''
                }
            }
        }
    }

This script will now trigger on each commit to our repo and then update the configuration of any instances that are defined in the instances folder.

Next, we need to set up our “Drift Detector”, which will be implemented as a second Jenkins job. Instead of polling the Git repo every minute, I’m going to have it run every minute regardless of whether there was a change or not.

In the case of the “Drift Detector,” we are going to write a small script to ensure that the config in Google Compute Engine matches our config in the repo. If not, we’ll send an email to report the issue.

    pipeline {
        agent any
        stages {
            stage('Detect Drift') {
                steps {
                    sh '''
                        cd instances
                        for file in *.yaml; do
                            NAME=$(basename "$file" .yaml)
                            # Export current config over the checked-out copy
                            gcloud compute instances export "$NAME" > "$file"
                        done
                        # Exit 1 if there is a difference in the config
                        git diff --exit-code
                    '''
                }
            }
        }
        post {
            failure {
                mail to: "viglesias@google.com",
                     subject: "[ERROR] Drift Detected",
                     body: "Click here for more info: ${env.BUILD_URL}"
            }
        }
    }
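The job above hinges on the exit code of `git diff --exit-code`: 0 when the working tree matches what is checked in, 1 when it differs. Here is a minimal local sketch of that check with no GCE involved. The `check_drift` function, the `vm-1.yaml` file, and its contents are made up for illustration, and the `echo` that overwrites the file stands in for `gcloud compute instances export`.

```shell
#!/usr/bin/env bash
# Sketch: use `git diff --exit-code` as a drift check in a temporary repo.
set -euo pipefail

REPO=$(mktemp -d)
cd "$REPO"
git init -q
git config user.name "Example Author"
git config user.email "author@example.com"

mkdir instances
echo "deletionProtection: false" > instances/vm-1.yaml
git add .
git commit -q -m "Desired state"

# Returns 0 when tracked files match the index (here, the last commit),
# and 1 when any tracked file has been modified.
check_drift() {
  git diff --exit-code > /dev/null
}

BEFORE=clean
check_drift || BEFORE=drift

# Stand-in for the export step: overwrite the file with the "live" config.
echo "deletionProtection: true" > instances/vm-1.yaml

AFTER=clean
check_drift || AFTER=drift

echo "before=$BEFORE after=$AFTER"
```

One limitation worth knowing: `git diff` only looks at tracked files, and the loop only exports instances that already have a file in the repo. An instance created out-of-band with no file in the `instances` folder would not be reported by this approach.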

With that in place we now have our “Drift Detector” doing its job. It will notify us if anything changes with that GCE instance. We’ll test this by updating a field on our instance out-of-band of the “Deployment System”, like setting the Deletion Protection flag via gcloud:

    gcloud compute instances update jenkins-gce-gitops-1 --deletion-protection

Within a minute or two we should see an email from Jenkins telling us about the drift:

When we click the link it sends us to the build itself where we can see the logs showing what the config drift was:

What if we wanted to automatically remediate issues like this? In the case of the system built here, all you’d need to do is set up the “Actuator” job to run when new changes come in AND periodically (like the “Drift Detector”).

Now the “Actuator” will correct any differences in config, even if there weren’t any new commits.

Let’s take a look at the system we have built with Jenkins:

Wait a second! Jenkins, API driven VM management and e-mail have been around FOREVER!

So why is everyone talking about GitOps?

GitOps shows an opinionated way to implement a long-standing pattern with a specific set of tools (Flux/ArgoCD/ConfigSync/etc.) for deploying to a specific type of infrastructure, namely Kubernetes.

GitOps’ opinionation was key to the adoption and broad reach of this implementation of the existing pattern within the fast-growing community of Kubernetes practitioners. This was especially important in 2018/2019, as teams were just becoming comfortable with Day 2 operations of Kubernetes, as well as starting to configure continuous delivery for their more critical applications. Weaveworks has done a great job marketing GitOps and promoting the patterns and tools, all while growing a community around both.

In summary…

Is GitOps a panacea? Definitely not, but maybe it can help your team. Run an experiment to see.

Can the patterns be implemented with a plethora of tools and platforms? Absolutely!

Is there a community of folks working hard to make tools and patterns, as well as learning from each other to make them better? You betcha. Keep an eye on what they are doing; maybe it’ll be right for you one day. You may learn something that you can implement for your existing CD system.

I believe that a key to success for tools in this space is in the opinionation they provide for how you deliver software. These opinions need to be strong enough to get you moving forward with few decisions but flexible enough to fit into your existing systems. If that balance is right, it can accelerate how you build the higher order socio-technical systems that get you more of the DevOps Capabilities that you should be striving for. At the end of the day, you should let teams pick the tools that work best for them.

The underlying truth, however, is that the tools and platforms, GitOps-ified or not, are a small piece of the puzzle. Bryan Liles put it well when he said that GitOps can “multiply your efforts or lack of effort immensely,” and that applies not just to GitOps but to most new tools you’ll onboard.

If you don’t have some of these key process and cultural capabilities in your organization, it’s going to be harder to improve the reliability and value generation of your software delivery practice:

If you’ve made it this far, thanks for reading my opinions on this topic!

For more, follow me on Twitter @vicnastea.

A while ago, I did a thread for GitOps practitioners to share their pros/cons. Check it out here: